Most voice agents apologise for not having that information. Ours give the right answers and convert better.

The Problem We Solve

Customer service teams waste hours searching through documents instead of helping customers. One of our manufacturing clients had more than 3,000 equipment manuals spanning 30 years, and staff routinely delayed responses while they hunted for information.

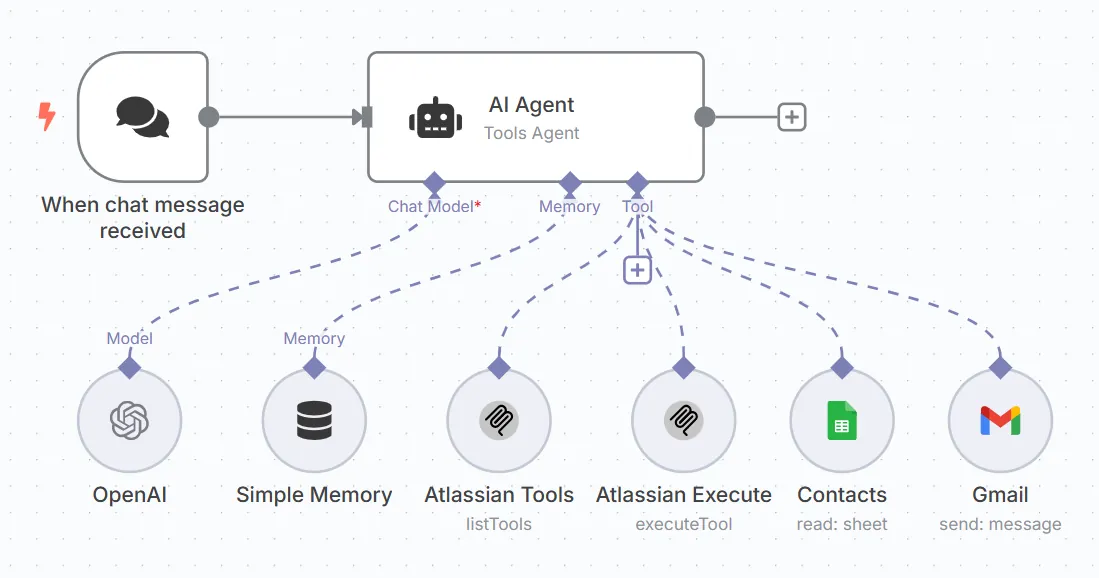

RAG Explained

Retrieval-Augmented Generation (RAG) combines three steps:

1. Retrieve - Find relevant information from knowledge bases

2. Augment - Enhance AI prompts with specific context

3. Generate - Create responses informed by both conversation and retrieved data
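The three steps above can be sketched in a few lines of Python. This is a minimal offline illustration under stated assumptions, not Waboom's or Retell's implementation: the knowledge-base chunks are invented, `embed` is a toy bag-of-words counter standing in for a real embedding model and vector database, and `generate` accepts any callable as a hypothetical stand-in for the language model.

```python
import math
import re
from collections import Counter
from typing import Callable

# Hypothetical knowledge-base chunks (e.g. paragraphs split out of equipment manuals).
KNOWLEDGE_BASE = [
    "Model X200 pump: replace the seal every 2,000 operating hours.",
    "Model X200 pump: maximum inlet pressure is 6 bar.",
    "Conveyor C9: reset the drive by holding STOP for 5 seconds.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Step 1 (Retrieve): return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda chunk: cosine(q, embed(chunk)), reverse=True)
    return ranked[:k]


def augment(query: str, chunks: list[str]) -> str:
    """Step 2 (Augment): inject the retrieved chunks into the prompt."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"Answer using only this context:\n{context}\n\nCaller: {query}"


def generate(prompt: str, llm: Callable[[str], str]) -> str:
    """Step 3 (Generate): hand the augmented prompt to a language model."""
    return llm(prompt)


if __name__ == "__main__":
    question = "What is the maximum inlet pressure on the X200 pump?"
    prompt = augment(question, retrieve(question))
    # Echo "LLM" so the example runs offline; swap in a real model client here.
    print(generate(prompt, llm=lambda p: p))
```

For the sample question, the inlet-pressure chunk ranks first because it shares the most words with the query. A semantic embedding model would typically also match paraphrases that share few exact words, which a word-count vector cannot.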

The system analyses the conversation context, searches vector databases, and injects relevant content into the prompt automatically, without requiring manual prompt engineering.
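Analysing conversation context matters because callers ask follow-ups ("what's the max inlet pressure?") that only make sense alongside earlier turns. The sketch below assumes one simple heuristic, joining the caller's last few turns into the search query, and then appends whatever was retrieved to the agent's base prompt for that turn. `Turn`, `build_retrieval_query`, and `inject_context` are hypothetical names for illustration; they are not part of Retell's API.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" (caller) or "agent"
    text: str


def build_retrieval_query(history: list[Turn], max_user_turns: int = 3) -> str:
    """Join the caller's most recent turns so a follow-up keeps the context of earlier ones."""
    user_turns = [turn.text for turn in history if turn.role == "user"]
    return " ".join(user_turns[-max_user_turns:])


def inject_context(base_prompt: str, chunks: list[str]) -> str:
    """Append retrieved chunks to the agent's base prompt for this turn only."""
    if not chunks:
        return base_prompt
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"{base_prompt}\n\nRelevant knowledge for this turn:\n{context}"


if __name__ == "__main__":
    history = [
        Turn("user", "I have an X200 pump."),
        Turn("agent", "Sure, how can I help with it?"),
        Turn("user", "What's the max inlet pressure?"),
    ]
    query = build_retrieval_query(history)
    print(query)  # → I have an X200 pump. What's the max inlet pressure?
    print(inject_context("You are a support agent.", ["X200 maximum inlet pressure is 6 bar."]))
```

Searching on the last question alone would lose the model number; carrying the earlier turn forward keeps "X200" in the query. Keeping the injected context per turn, rather than accumulating it, stops stale chunks from crowding the prompt.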

Real-World Application

Manufacturing Client Case Study:

Successfully Deployed Agents

Our agents manage:

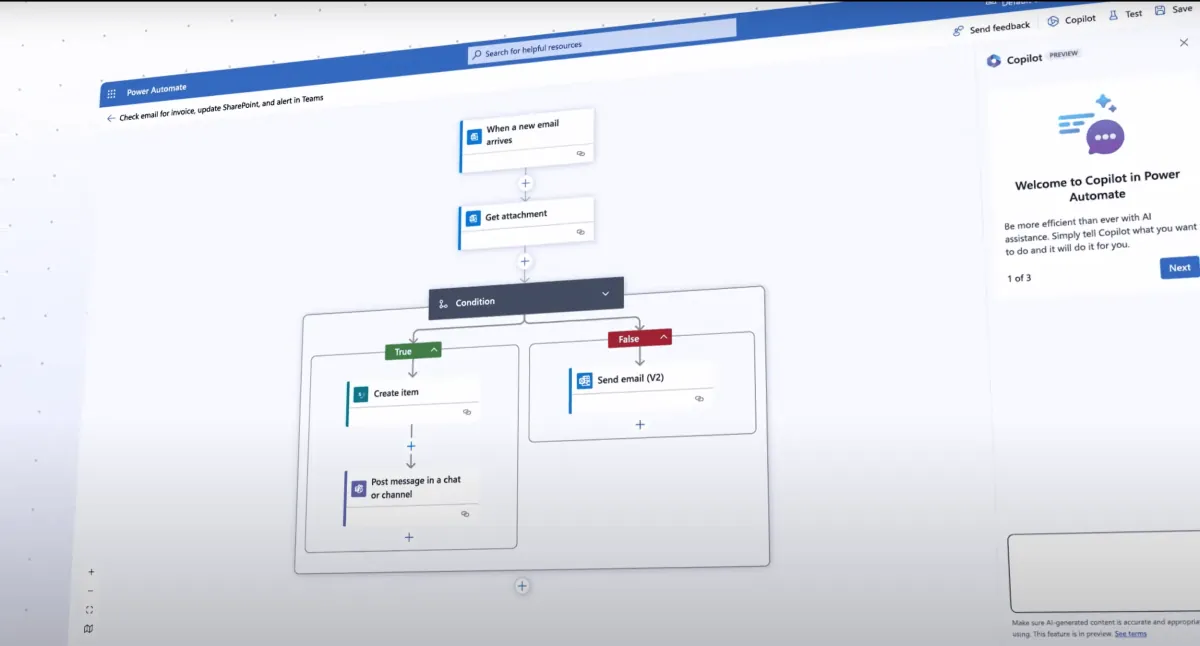

System Limitations & Solutions

Retell's Knowledge Base has defined limits:

Our Workaround: Create multiple themed knowledge bases linked to a single agent, segmented by:
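One way to picture several themed knowledge bases behind one agent is to route each question to the best-matching theme before searching. This is a minimal sketch under loud assumptions: the themes (`pumps`, `conveyors`, `billing`) and their routing keywords are invented, routing is simple keyword overlap, and nothing here uses Retell's API. The vector search itself is left out; it would run only over the chunks `candidate_chunks` returns.

```python
import re
from typing import Optional

# Hypothetical themes; the real segmentation criteria are not reproduced here.
THEMED_KBS: dict[str, list[str]] = {
    "pumps": [
        "X200 seal replacement interval is 2,000 operating hours.",
        "X200 maximum inlet pressure is 6 bar.",
    ],
    "conveyors": ["C9 drive reset: hold STOP for 5 seconds."],
    "billing": ["Invoices are issued on the 1st of each month."],
}

# Hypothetical routing keywords for each themed knowledge base.
ROUTING_KEYWORDS: dict[str, set[str]] = {
    "pumps": {"pump", "x200", "seal", "pressure"},
    "conveyors": {"conveyor", "c9", "belt", "drive"},
    "billing": {"invoice", "invoices", "payment", "billing"},
}


def route(query: str) -> Optional[str]:
    """Pick the themed knowledge base whose keywords overlap the query most."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    scores = {theme: len(words & keywords) for theme, keywords in ROUTING_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def candidate_chunks(query: str) -> list[str]:
    """Return the chunks to search: one themed KB if routing succeeds, else all of them."""
    theme = route(query)
    if theme is None:
        # No theme matched, so fall back to searching every knowledge base.
        return [chunk for chunks in THEMED_KBS.values() for chunk in chunks]
    return THEMED_KBS[theme]


if __name__ == "__main__":
    print(route("How do I reset the conveyor drive?"))  # → conveyors
    print(len(candidate_chunks("Hello there")))  # → 4
```

Falling back to all knowledge bases when routing fails trades some extra search work for not missing an answer that sits in an unexpected theme.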

Optimization Strategies

To minimize latency (each retrieval costs roughly 100 ms):

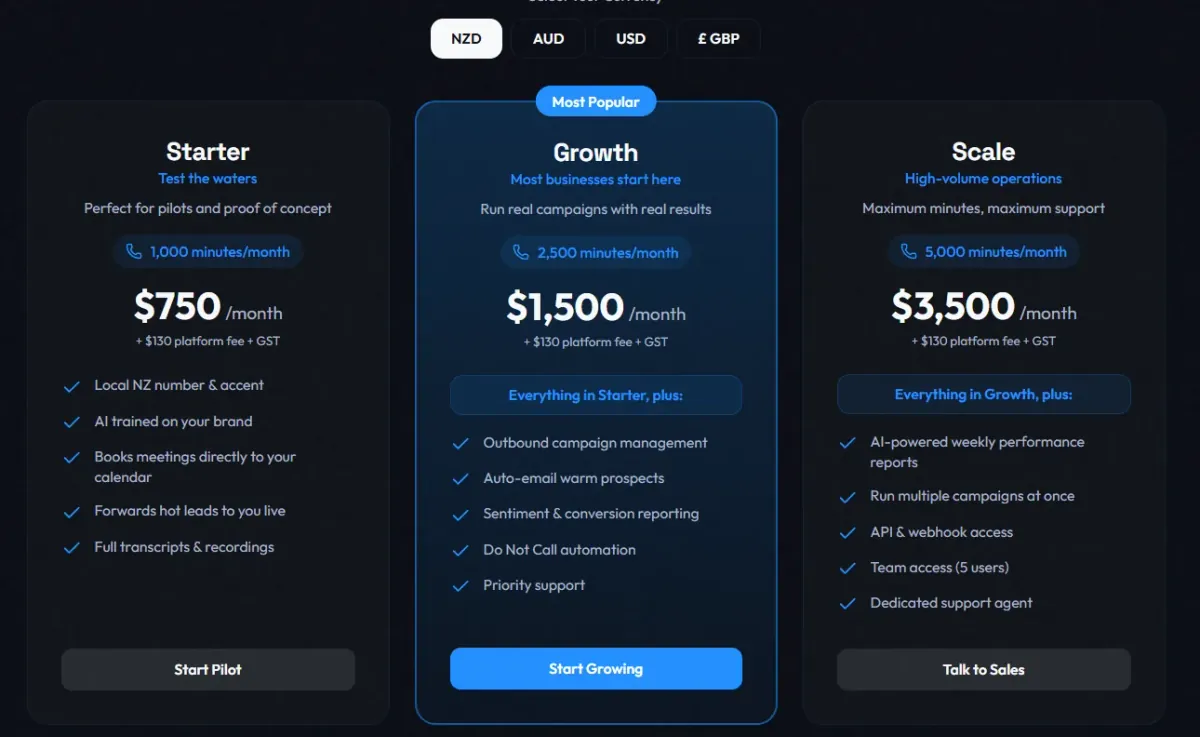

Enterprise Solution: Titan AI

Our Titan AI dashboard grants internal staff ChatGPT-style access to organizational knowledge. This enables:

Your documents become instant answers.

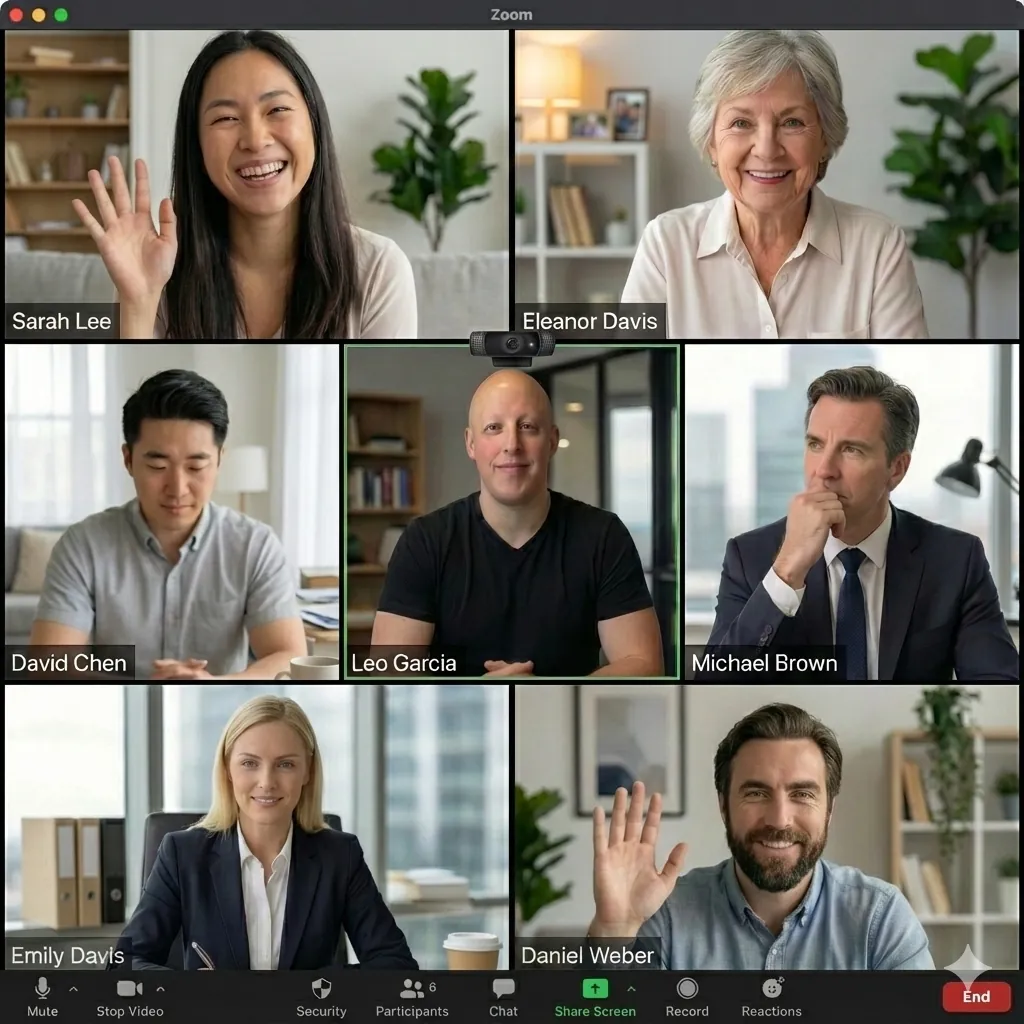

Leonardo Garcia-Curtis

Founder & CEO at Waboom AI. Building voice AI agents that convert.

Ready to Build Your AI Voice Agent?

Let's discuss how Waboom AI can help automate your customer conversations.
