Voice agents live or die by latency. A 2-second pause feels awkward. A 3-second pause feels broken. Users hang up.

Key Latency Sources

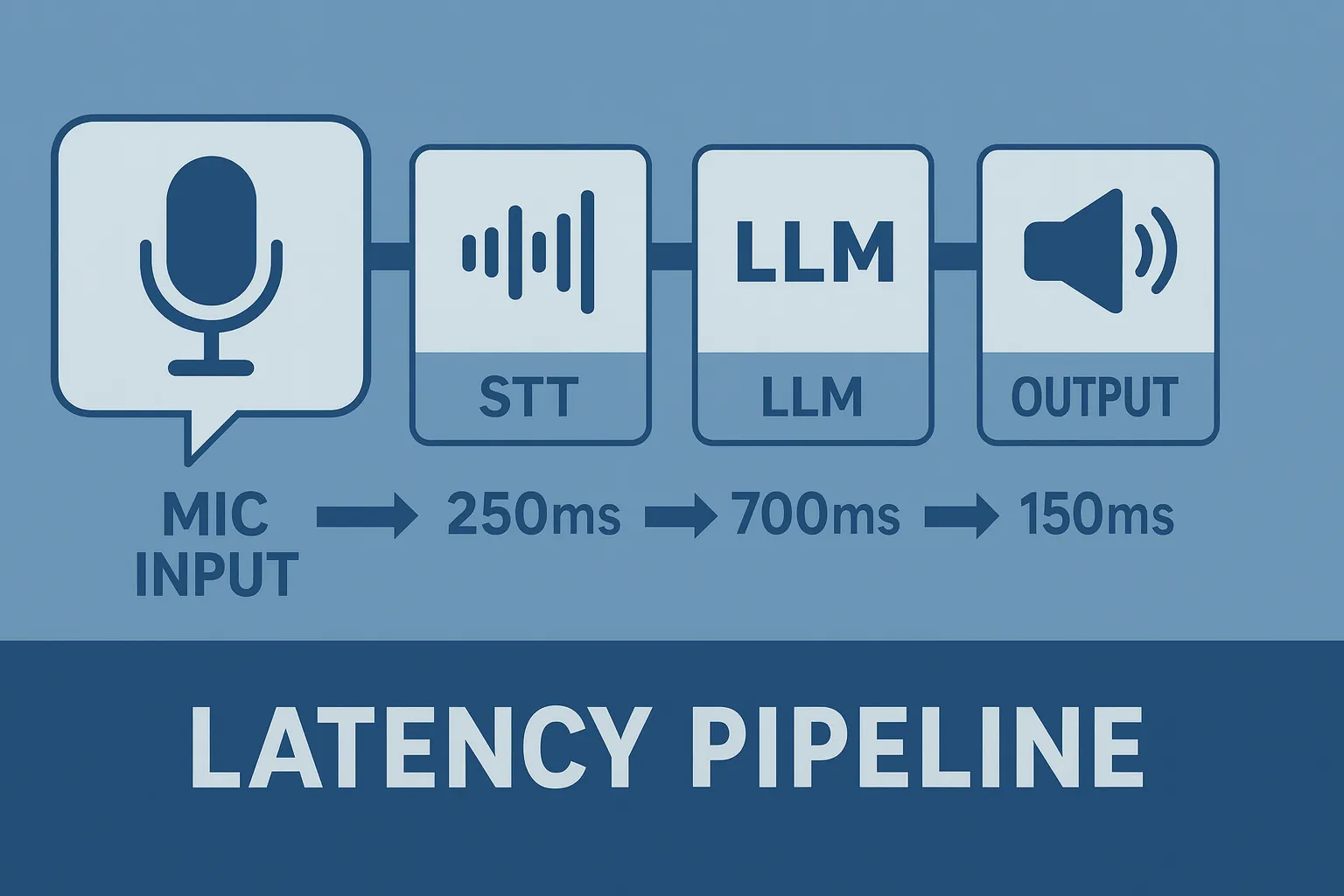

The complete latency stack has six main contributors to response delay:

1. Network round-trip time

2. Speech-to-text conversion

3. LLM processing (primary bottleneck: 500-900 ms)

4. Text-to-speech generation

5. Knowledge base retrieval

6. External function calls
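To see where a turn's delay actually goes, it helps to time each stage separately. The sketch below assumes the stages run one after another, so their delays simply add. With response streaming the stages overlap, so in that case the sum is a pessimistic upper bound. The stage names, the `summarize_turn` helper, and the 2,000 ms default budget are illustrative choices, not part of any Retell API. The budget echoes the opening's point that a 2-second pause already feels awkward. The example timings are made up for illustration and are not measurements.

```python
from typing import Mapping, NamedTuple

# Stage names mirror the six latency contributors listed above (illustrative labels).
STAGES = (
    "network",
    "speech_to_text",
    "llm",
    "text_to_speech",
    "knowledge_base",
    "function_calls",
)


class TurnSummary(NamedTuple):
    total_ms: float
    slowest_stage: str
    over_budget: bool


def summarize_turn(timings_ms: Mapping[str, float], budget_ms: float = 2000.0) -> TurnSummary:
    """Summarize one conversational turn from per-stage timings in milliseconds.

    Assumes stages run sequentially, so their delays add up. Stages absent from
    `timings_ms` (e.g. no knowledge base lookup on this turn) count as 0 ms.
    """
    unknown = set(timings_ms) - set(STAGES)
    if unknown:
        raise ValueError(f"unknown stages: {sorted(unknown)}")
    filled = {stage: float(timings_ms.get(stage, 0.0)) for stage in STAGES}
    total = sum(filled.values())
    # The stage with the largest delay is the first candidate for optimization.
    slowest = max(filled, key=filled.__getitem__)
    return TurnSummary(total, slowest, total > budget_ms)


# Hypothetical timings for illustration only; these are not measurements.
example = {"network": 100, "speech_to_text": 200, "llm": 700, "text_to_speech": 150}
print(summarize_turn(example))
```

With these made-up numbers the LLM stage dominates the total, which is consistent with the list's note that LLM processing is the primary bottleneck.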

Primary Optimization Strategies

Use Retell's Fast Tier

The Fast Tier delivers more consistent performance through optimized routing and dedicated resources.

Optimize Prompts for Brevity

Before:

"Please provide the customer with a comprehensive explanation of our return policy, including all exceptions, timeframes, and the step-by-step process for initiating a return."

After:

"Explain returns: 30 days, original packaging, receipt needed. Exceptions: final sale items."

Implement Response Streaming

Start speaking the first part of the reply while the LLM is still generating the rest, instead of waiting for the full response.
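Sentence-level chunking is one common way to do this. The idea is to buffer the streamed tokens and hand each sentence to text-to-speech as soon as it is complete, so audio can begin after the first sentence rather than after the whole reply. Here is a minimal sketch, assuming a simple boundary rule: `.`, `!`, or `?` followed by whitespace. `stream_sentences` and `speak` are hypothetical names, and the token list stands in for a real LLM stream.

```python
import re
from typing import Iterable, Iterator

# Assumed boundary rule: a sentence ends at ., ! or ? followed by whitespace.
_SENTENCE_END = re.compile(r"[.!?](?=\s)")


def stream_sentences(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a token stream."""
    buffer = ""
    for token in tokens:
        buffer += token
        # Flush every complete sentence currently sitting in the buffer.
        while (match := _SENTENCE_END.search(buffer)):
            cut = match.end()
            sentence = buffer[:cut].strip()
            buffer = buffer[cut:]
            if sentence:
                yield sentence
    # Whatever remains when the stream ends is the final (possibly unterminated) sentence.
    if buffer.strip():
        yield buffer.strip()


def speak(sentence: str) -> None:
    # Hypothetical stand-in for a text-to-speech call.
    print(f"TTS <- {sentence}")


# Simulated LLM token stream (illustrative only).
tokens = ["Returns", " within", " 30", " days.", " Keep", " the", " receipt.",
          " Final", " sale", " items", " excluded."]
for sentence in stream_sentences(tokens):
    speak(sentence)
```

Splitting on whole sentences keeps TTS prosody natural. Cutting on smaller fragments can start audio sooner, but it tends to sound choppy. This naive boundary rule also splits on abbreviations such as "e.g.", so a production version would need a sturdier rule.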

Knowledge Base Optimization

Industry-Specific Applications

Technical Settings

Monitoring Metrics

Track these critical metrics:

Perceived latency matters as much as measured performance. Fast feels trustworthy.

Leonardo Garcia-Curtis

Founder & CEO at Waboom AI. Building voice AI agents that convert.

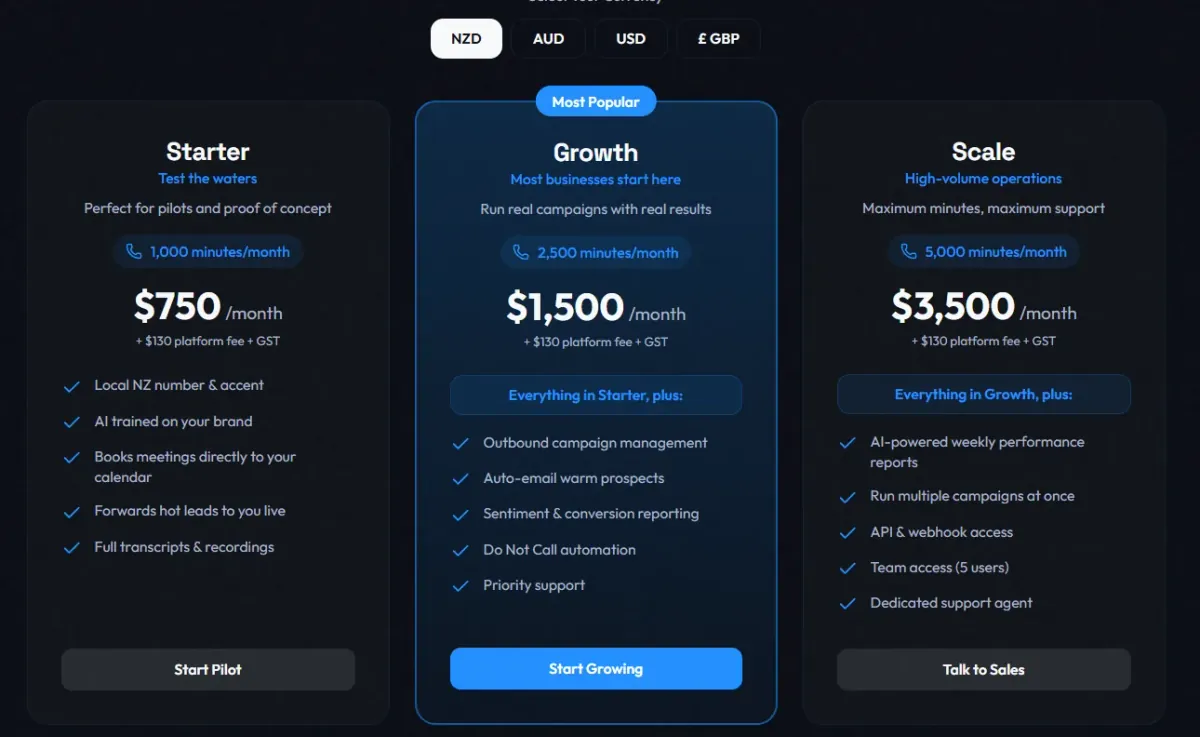

Ready to Build Your AI Voice Agent?

Let's discuss how Waboom AI can help automate your customer conversations.
