Most voice AI projects stop when the agent goes live. That's where we start watching.

The Launch Is Just the Beginning

Voice AI implementation doesn't end at launch—that's where optimization begins. At Waboom.ai, we've learned that "scale doesn't forgive sloppiness—it amplifies it."

A small issue repeated thousands of times becomes a brand problem. That's why human oversight remains critical.

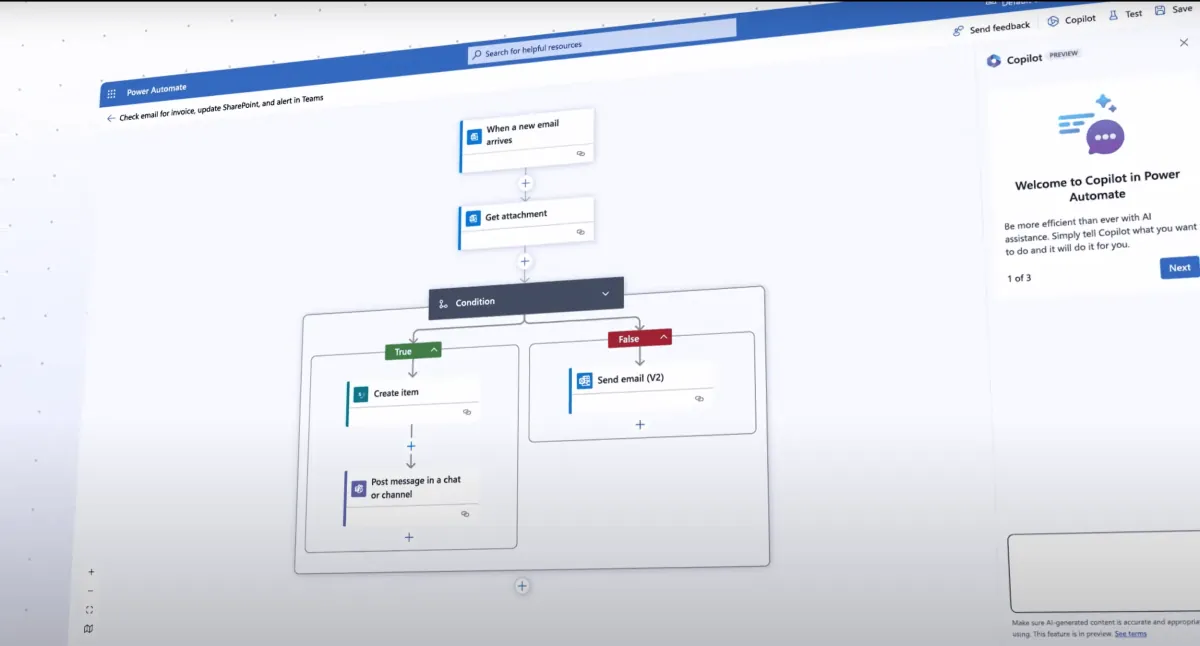

Performance Management, Not Maintenance

Our post-deployment approach is performance management, not passive maintenance. Instead of waiting for something to break, we listen, review, analyze, and improve agent performance continuously. The goal is better business outcomes: faster conversions, refined messaging, and earlier customer support intervention.

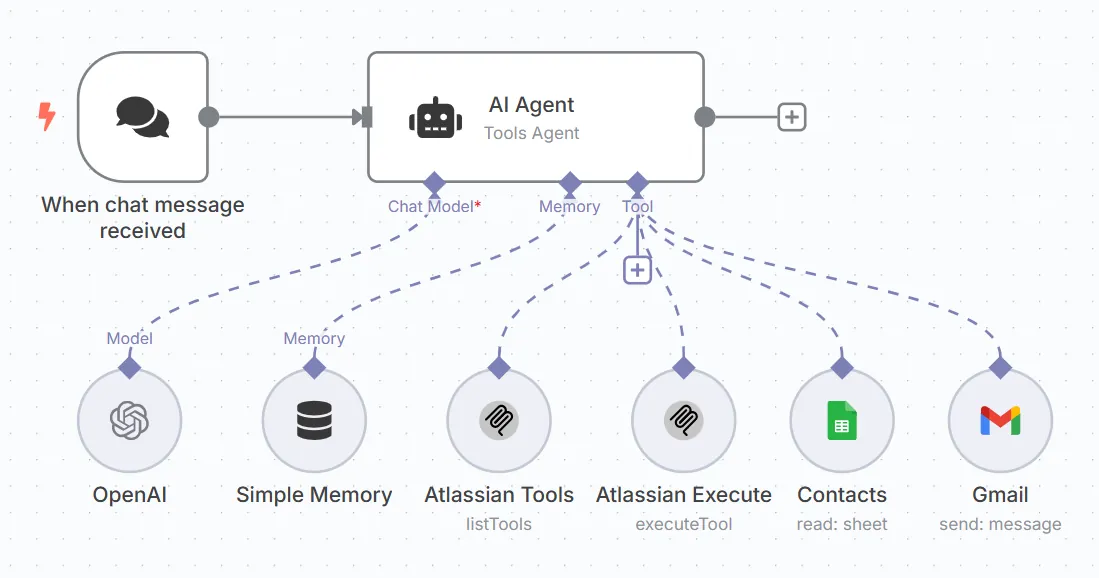

Automated Monitoring and Detection

Our systems automatically flag warning signs, including:

Surgical Performance Optimization

Rather than complete rewrites, we make surgical improvements. Here's an example:

Before:

"I understand you're experiencing an issue with your order. Let me look into that for you. Could you please provide me with your order number so I can access your account and see what's happening?"

After:

"I can help with that order issue. What's your order number?"

Simple. Direct. Faster. Improvements focus on specific friction points rather than wholesale rewrites.
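
One rough way to put a number on an edit like this is to compare word counts, treating length as a stand-in for how long the agent talks before the caller gets to answer. The sketch below is illustrative only: `word_count` and `reduction` are hypothetical helper names, not part of our tooling or of Retell.ai's platform, and word count is an assumed proxy rather than a measured speaking time.

```python
# Illustrative sketch: compare the length of two agent prompts.
# Assumption: word count roughly tracks how long the agent speaks.

BEFORE = (
    "I understand you're experiencing an issue with your order. "
    "Let me look into that for you. Could you please provide me with "
    "your order number so I can access your account and see what's happening?"
)
AFTER = "I can help with that order issue. What's your order number?"


def word_count(text: str) -> int:
    """Count whitespace-separated words in a prompt."""
    return len(text.split())


def reduction(before: str, after: str) -> float:
    """Return the fraction of words removed going from before to after."""
    b = word_count(before)
    a = word_count(after)
    return (b - a) / b


if __name__ == "__main__":
    print(f"before: {word_count(BEFORE)} words")
    print(f"after:  {word_count(AFTER)} words")
    print(f"cut:    {reduction(BEFORE, AFTER):.0%}")
```

For the example above, the prompt drops from 35 words to 11, roughly a two-thirds cut, while still asking the same question.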

Sarah's Weekly Optimization Loop

Meet Sarah, our Client Success Manager. Her week looks like this:

Dashboard Analytics

We leverage Retell.ai's platform to track:

Results

The Core Message

Human expertise combined with AI creates superior outcomes through continuous, data-driven refinement rather than passive monitoring. Your AI agent gets smarter every week—but only if humans are watching.

Leonardo Garcia-Curtis

Founder & CEO at Waboom AI. Building voice AI agents that convert.

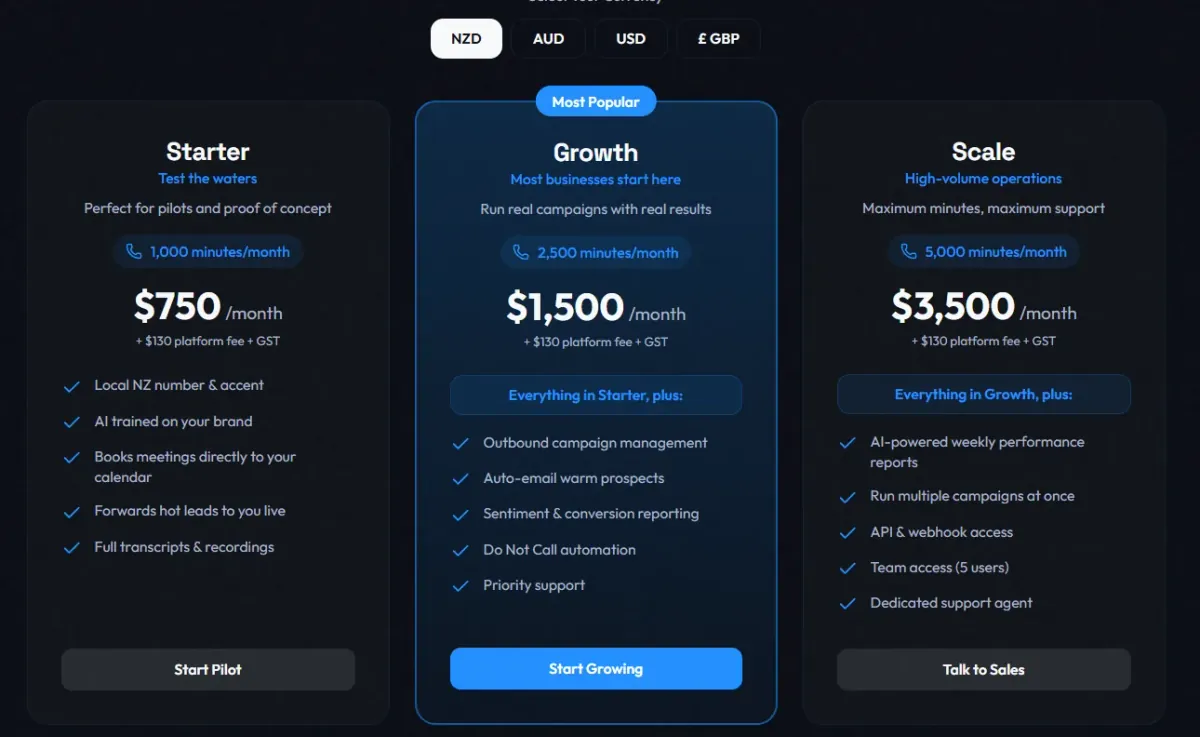
