Voice AI should perform under pressure. At Waboom.ai, we simulate thousands of conversations to stress-test agents before launch.

The Problem with Manual Testing

Picture this: your star developer has just spent three weeks manually testing a single Retell AI voice agent, one repetitive phone call after another.

Typical Testing Cycle

That's 60+ hours per agent, per major iteration.

Why Manual Testing Fails

Three critical limitations:

1. Human inconsistency - Tester performance varies by time of day

2. Conversation fatigue - Reliability drops after 50+ iterations, and testers start missing edge cases

3. Scope creep - Endless "what if" scenarios spiral uncontrollably

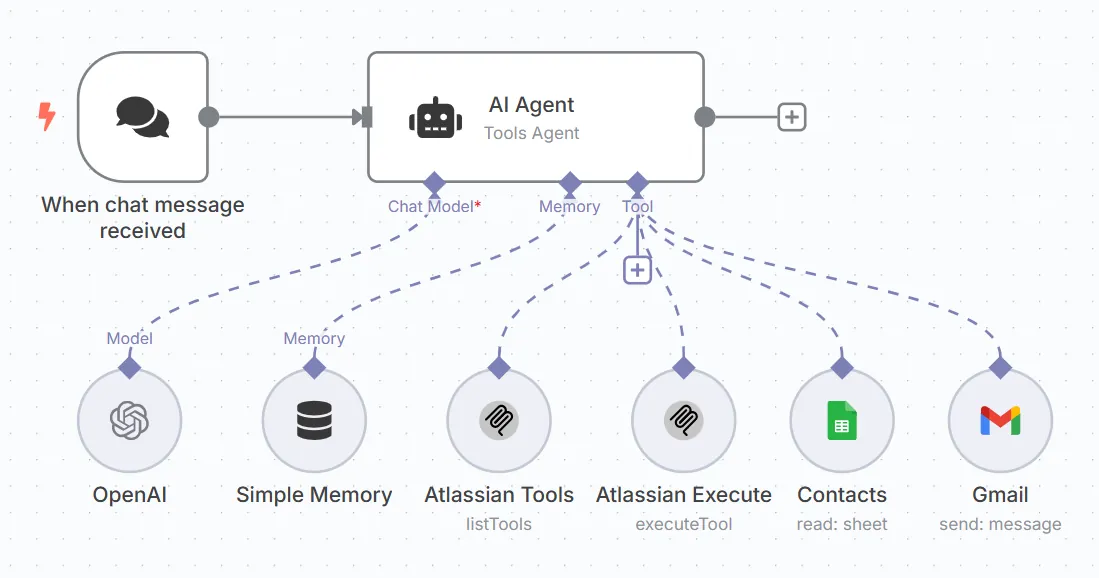

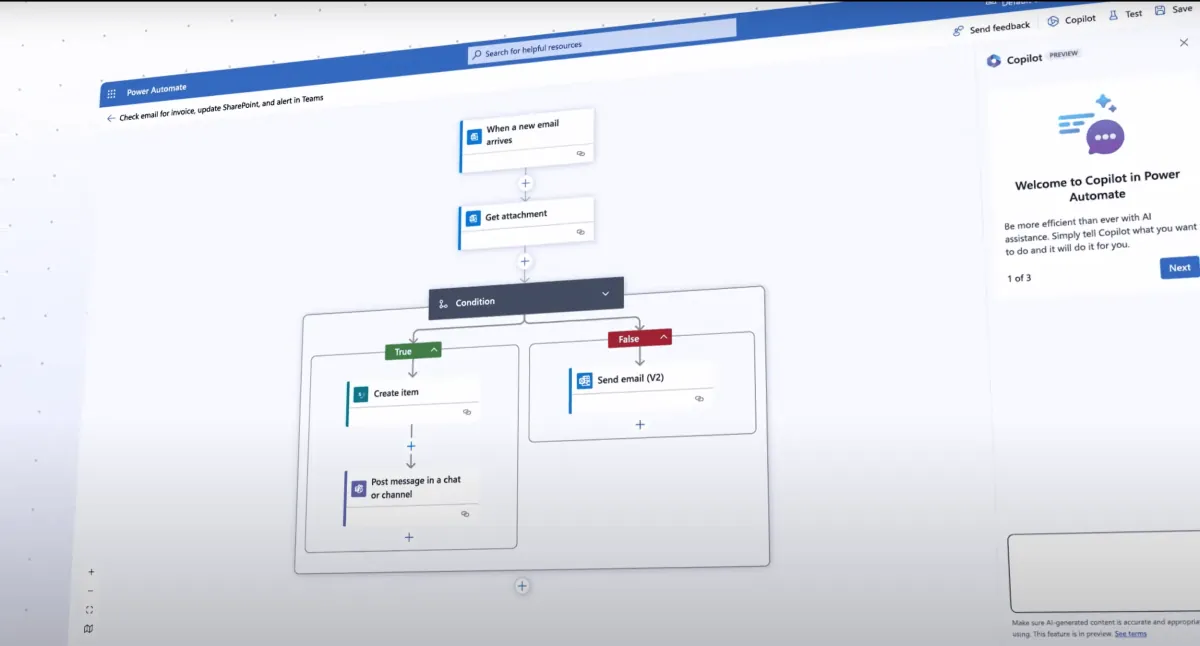

The Solution: Batch Simulation Testing

Retell AI's batch simulation testing lets you test 20+ scenarios at the same time instead of one call after another.

The Workflow

1. Create detailed customer personas (frustrated executives, confused elderly customers, hurried professionals)

2. Define specific success metrics and measurable evaluation criteria for each scenario

3. Run comprehensive test suites in parallel (20+ simultaneous scenarios)

4. Analyze results and iterate
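To make the four steps concrete, here is a minimal Python sketch of the same loop. It does not call Retell AI: `simulate_call` is a hypothetical stand-in that returns a canned transcript, and `Scenario`, `run_batch`, the opening lines, and the pass criteria are all illustrative names and values we made up for this example. Swap the stub for whatever simulation call your platform actually provides.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Scenario:
    persona: str                   # step 1: who the simulated caller is
    opening_line: str              # what the caller says first
    passes: Callable[[str], bool]  # step 2: measurable criterion on the transcript


def simulate_call(scenario: Scenario) -> str:
    """Hypothetical stub: returns a canned transcript instead of placing a call."""
    return (
        f"caller ({scenario.persona}): {scenario.opening_line}\n"
        "agent: I can help with that. Your booking is confirmed."
    )


def run_batch(scenarios, max_workers=20):
    """Step 3: run every scenario in parallel and score each transcript."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        transcripts = list(pool.map(simulate_call, scenarios))
    return {s.persona: s.passes(t) for s, t in zip(scenarios, transcripts)}


SCENARIOS = [
    Scenario("frustrated executive",
             "I've been on hold forever. Fix my booking.",
             lambda t: "confirmed" in t),
    Scenario("confused elderly customer",
             "Hello? Is this the right number?",
             lambda t: "help" in t),
    Scenario("hurried professional",
             "I have two minutes. Can you reschedule me?",
             lambda t: "rescheduled" in t),
]

# Step 4: analyze results and decide what to fix before the next run.
results = run_batch(SCENARIOS)
failed = [persona for persona, ok in results.items() if not ok]
print(f"{len(results) - len(failed)}/{len(results)} scenarios passed; failed: {failed}")
```

With the canned transcript, the hurried professional scenario fails because the agent never confirms a reschedule. That is the kind of gap a batch run surfaces in one pass, rather than on the fiftieth manual call.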

Results Achieved

Before Batch Testing:

After Implementation:

Current Limitations

The testing approach has constraints:

But the ROI is undeniable. Battle-tested agents earn customer trust from day one.

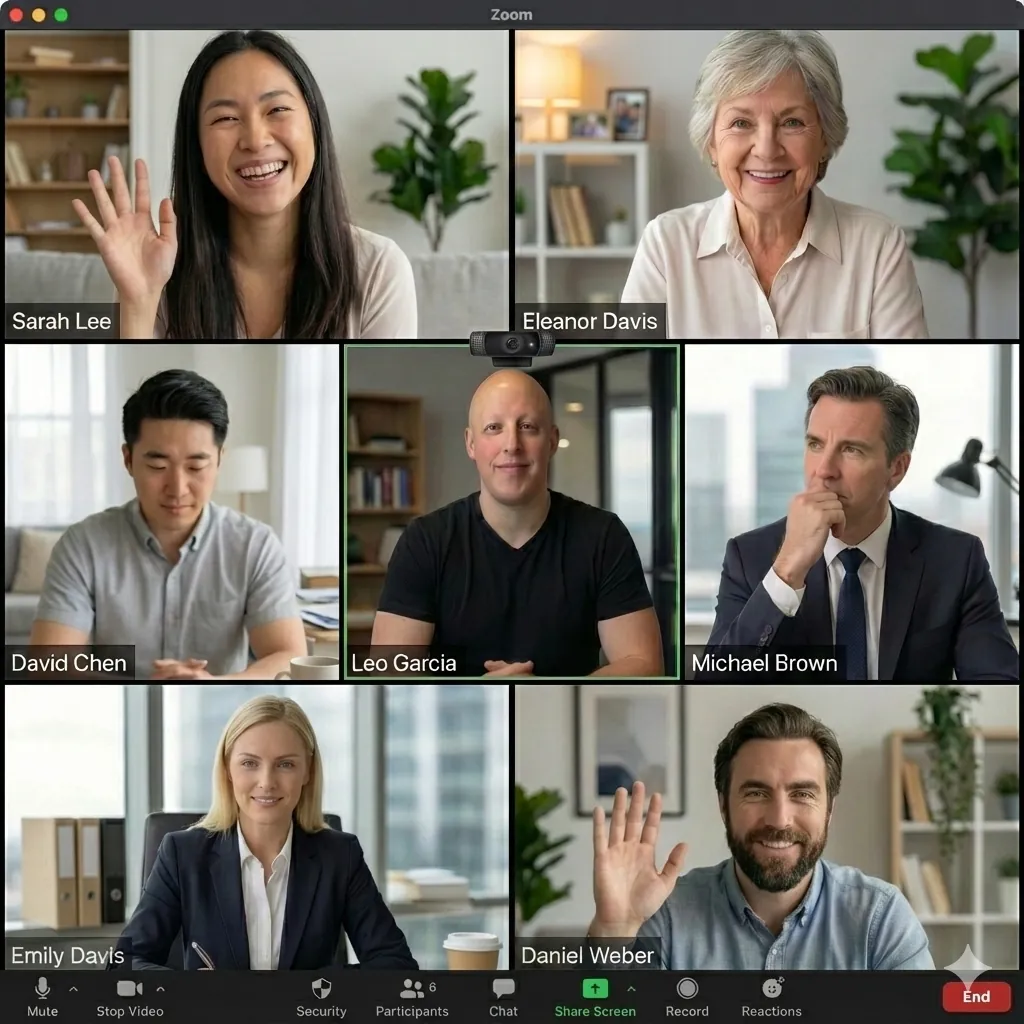

Leonardo Garcia-Curtis

Founder & CEO at Waboom AI. Building voice AI agents that convert.

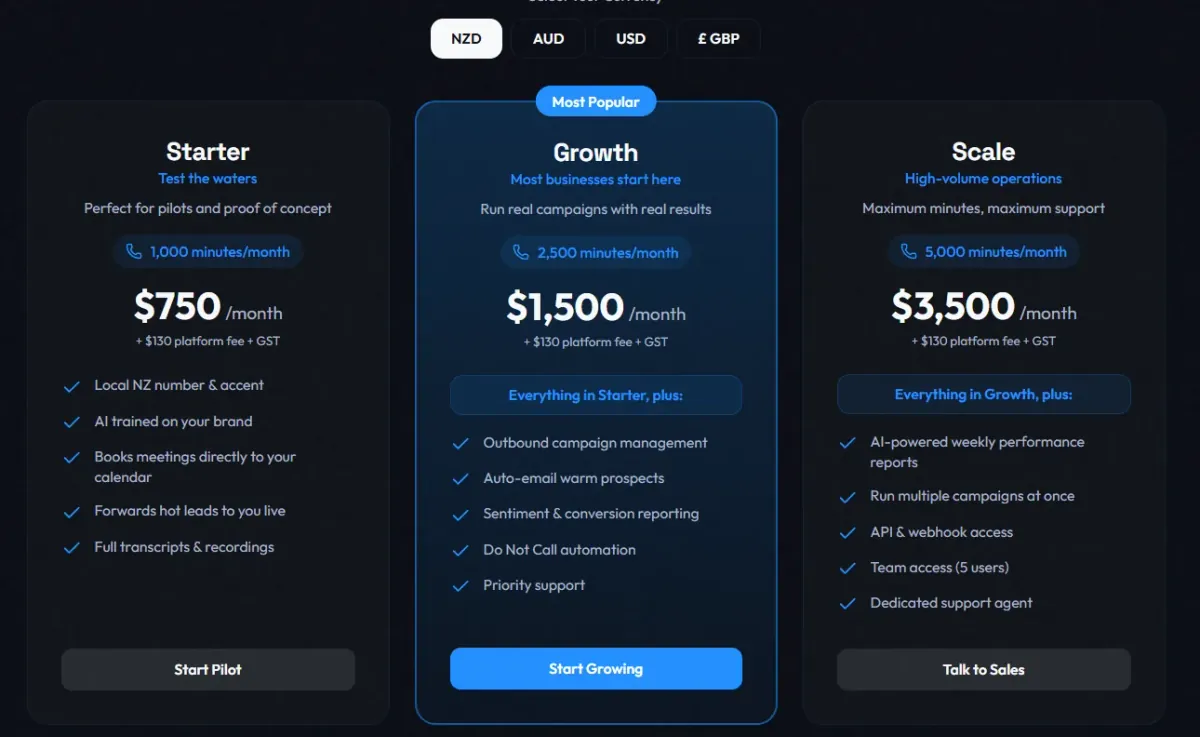
